Paper published in SCOPUS-indexed international journal JICRS - 김도희

2025.10.01

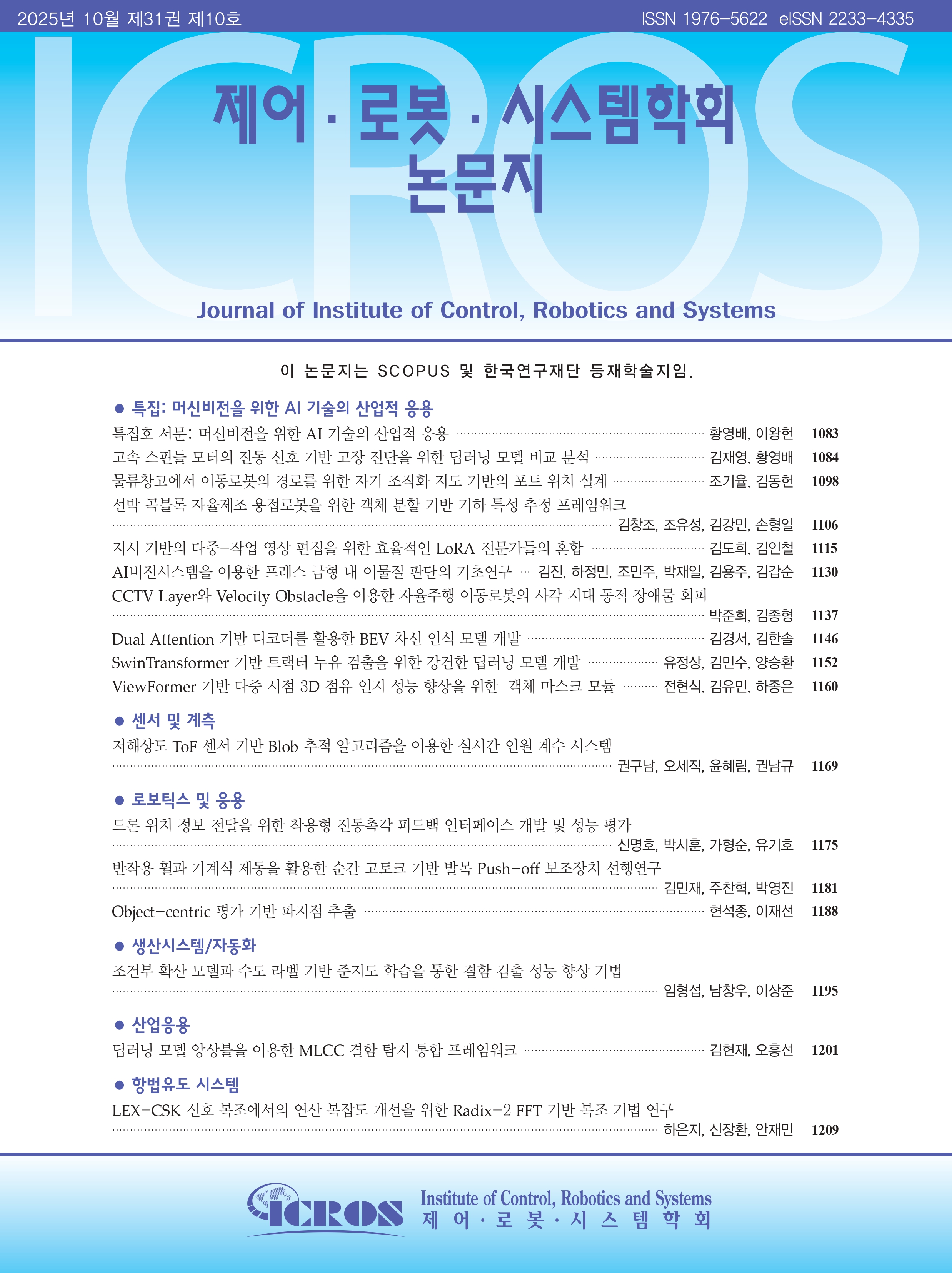

Journal: JICRS (Journal of Institute of Control, Robotics and Systems), Vol. 31, No. 10, pp. 1115-1129, October 2025.

Title: "An Efficient Mixture of LoRA Experts for Instruction-based Multi-task Image Editing"

Authors: 김도희, 김인철

Abstract: This study proposes MOLIE, a novel instruction-based diffusion model for domain-specific image editing tasks that integrates a mixture of LoRA experts. To improve efficiency and scalability, MOLIE combines LoRA fine-tuning with a Mixture of Experts (MoE) architecture, in which each LoRA module is trained specifically for one image editing operation, such as object addition, object removal, object replacement, object change, cartoonization, or image deblurring. During fine-tuning, each LoRA expert module adapts parameters only in the up blocks of the U-Net, which reduces computational cost and improves image quality. A novel loss function that incorporates a structural loss is used to train the gating function, enabling effective coordination of tasks among the LoRA experts. Model performance is evaluated with a novel Instruction-Image Alignment (IIA) metric, obtained by prompting a multimodal large language model. Extensive quantitative and qualitative experiments on a benchmark dataset demonstrate the superior performance of the proposed model.
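The abstract describes the architecture only at a high level. As a rough illustration of the general mixture-of-LoRA-experts idea, and not the authors' MOLIE implementation, the sketch below shows a single linear layer whose frozen weight W is augmented by several low-rank LoRA updates combined by a softmax gate: y = W x + sum_k g_k(x) (alpha / r) B_k A_k x. The router (a linear map over the layer input), the initialization, and the names `LoRAExpert` and `MixtureOfLoRALinear` are hypothetical choices made for illustration. The paper's actual gating function, its structural loss, and the restriction of experts to the U-Net up blocks are not modeled here.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(z: Vector) -> Vector:
    """Numerically stable softmax; outputs are non-negative and sum to 1."""
    mx = max(z)
    exps = [math.exp(s - mx) for s in z]
    total = sum(exps)
    return [e / total for e in exps]


class LoRAExpert:
    """Hypothetical LoRA expert: delta_W = (alpha / rank) * B @ A.

    A has shape (rank, d_in) and B has shape (d_out, rank).
    """

    def __init__(self, d_in: int, d_out: int, rank: int, alpha: float, rng: random.Random):
        self.scale = alpha / rank
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(rank)]
        # B starts at zero (standard LoRA init), so a fresh expert adds nothing.
        self.B = [[0.0] * rank for _ in range(d_out)]

    def delta(self, x: Vector) -> Vector:
        # Low-rank path: project down with A, back up with B, then scale.
        return [self.scale * h for h in matvec(self.B, matvec(self.A, x))]


class MixtureOfLoRALinear:
    """Frozen linear layer plus a softmax-gated sum of LoRA experts (assumed design)."""

    def __init__(self, W: Matrix, num_experts: int, rank: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        self.W = W  # frozen pretrained weight, never updated here
        d_out, d_in = len(W), len(W[0])
        self.experts = [LoRAExpert(d_in, d_out, rank, alpha, rng) for _ in range(num_experts)]
        # Assumed router: one linear score per expert over the layer input.
        self.router = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(num_experts)]

    def gate(self, x: Vector) -> Vector:
        return softmax(matvec(self.router, x))

    def forward(self, x: Vector) -> Vector:
        y = matvec(self.W, x)  # frozen base output
        for weight, expert in zip(self.gate(x), self.experts):
            d = expert.delta(x)
            y = [yi + weight * di for yi, di in zip(y, d)]
        return y


if __name__ == "__main__":
    layer = MixtureOfLoRALinear([[1.0, 0.0], [0.0, 2.0]], num_experts=3, rank=1, alpha=1.0)
    print(layer.forward([3.0, 4.0]))  # [3.0, 8.0]: all B matrices are still zero
```

Because every B starts at zero, the layer initially reproduces the frozen output exactly; fine-tuning would update only the A, B, and router parameters, which is what keeps a LoRA-based mixture cheap compared with fine-tuning the full weight.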